NPTEL Introduction To Machine Learning Week 5 Assignment Solutions

NPTEL Introduction To Machine Learning Week 5 Assignment Answer 2023

1. The perceptron learning algorithm is primarily designed for:

- Regression tasks

- Unsupervised learning

- Clustering tasks

- Linearly separable classification tasks

- Non-linear classification tasks
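As a rough illustration of why linear separability matters for the perceptron, the sketch below trains the classic perceptron update rule on two toy datasets. The function name `train_perceptron`, the {-1, +1} label encoding, and the epoch limit are assumptions made for this example, not part of the assignment.

```python
import numpy as np

def train_perceptron(X, y, epochs=100, lr=1.0):
    """Perceptron rule with labels in {-1, +1} (encoding is an assumption).

    Returns the weights, the bias, and the number of mistakes made in the
    last epoch that was run.
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    mistakes = 0
    for _ in range(epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            # A point is misclassified (or on the boundary) when y * score <= 0.
            if yi * (np.dot(w, xi) + b) <= 0:
                w += lr * yi * xi
                b += lr * yi
                mistakes += 1
        if mistakes == 0:  # a full pass with no updates: training has converged
            break
    return w, b, mistakes

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([-1, -1, -1, 1])   # AND: linearly separable
y_xor = np.array([-1, 1, 1, -1])    # XOR: not linearly separable

_, _, and_mistakes = train_perceptron(X, y_and)
_, _, xor_mistakes = train_perceptron(X, y_xor)
print("AND mistakes in last epoch:", and_mistakes)
print("XOR mistakes in last epoch:", xor_mistakes)
```

On the separable AND data the loop stops once an epoch passes with no updates, while on XOR no single line separates the classes, so every epoch still contains at least one mistake.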


2. The last layer of an ANN is linear for ______ and softmax for ______.

- Regression, Regression

- Classification, Classification

- Regression, Classification

- Classification, Regression


3. Consider the following statement and answer True/False with corresponding reason:

The class outputs of a classification problem with an ANN cannot be treated independently.

- True. Due to cross-entropy loss function

- True. Due to softmax activation

- False. This is the case for regression with single output

- False. This is the case for regression with multiple outputs
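To make the coupling between class outputs concrete, here is a minimal sketch of a softmax output layer. The helper name `softmax` and the example logits are assumptions chosen for illustration; the point is only that the outputs share one normalizing sum.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D array of logits."""
    z = np.asarray(z, dtype=float)
    e = np.exp(z - z.max())   # subtracting the max avoids overflow
    return e / e.sum()

p_before = softmax([2.0, 1.0, 0.1])
p_after = softmax([2.0, 3.0, 0.1])   # only the second logit was changed

print(p_before, p_before.sum())
print(p_after, p_after.sum())
```

Changing a single logit shifts every output probability, because all of them are divided by the same sum. A linear output layer, as typically used for regression, has no such shared normalization.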


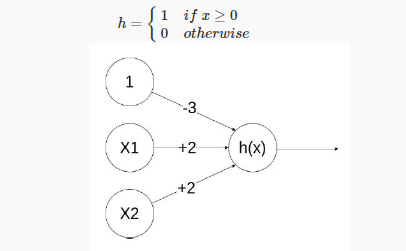

4. Given below is a simple ANN with 2 inputs X1, X2 ∈ {0,1} and edge weights −3, +2, +2.

Which of the following logical functions does it compute?

- XOR

- NOR

- NAND

- AND
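One way to check a small network like this is to enumerate its truth table. The sketch below assumes that −3 is the weight on the bias input, that +2 is the weight on each of X1 and X2, and that the unit fires when the weighted sum is positive. These assignments are a reading of the figure, which may label the edges differently.

```python
from itertools import product

def threshold_unit(x1, x2, bias=-3, w1=2, w2=2):
    """Single threshold neuron; the weight roles are assumed, not confirmed."""
    s = bias + w1 * x1 + w2 * x2
    return 1 if s > 0 else 0

# Evaluate the unit on all four binary input combinations.
truth_table = {
    (x1, x2): threshold_unit(x1, x2)
    for x1, x2 in product([0, 1], repeat=2)
}
for inputs, output in truth_table.items():
    print(inputs, "->", output)
```

Under these assumptions the weighted sum is positive only for input (1, 1), which is the truth table of AND.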


5. Using the notations used in class, evaluate the value of the neural network with a 3-3-1 architecture (2-dimensional input with 1 node for the bias term in both the layers). The parameters are as follows

Using the sigmoid function as the activation function at both layers, the output of the network for an input of (0.8, 0.7) will be (up to 4 decimal places):

- 0.7275

- 0.0217

- 0.2958

- 0.8213

- 0.7291

- 0.8414

- 0.1760

- 0.7552

- 0.9442

- None of these
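The forward pass for a 3-3-1 network of this shape can be sketched as follows. The weight values in `W1` and `W2` are hypothetical placeholders, since the actual parameters are not reproduced in the text, so the printed number is not the answer to the question. Substitute the real parameters to evaluate it.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, W1, W2):
    """Forward pass: (bias + 2 inputs) -> (bias + 2 hidden units) -> 1 output."""
    a0 = np.concatenate(([1.0], x))   # prepend the bias node to the input
    h = sigmoid(W1 @ a0)              # W1 has shape (2, 3): two hidden units
    a1 = np.concatenate(([1.0], h))   # prepend the bias node to the hidden layer
    return float(sigmoid(W2 @ a1))    # W2 has shape (3,): one output unit

# Hypothetical placeholder weights, NOT the ones from the assignment.
W1 = np.array([[0.1, 0.2, -0.3],
               [-0.1, 0.4, 0.5]])
W2 = np.array([0.2, -0.4, 0.6])

x = np.array([0.8, 0.7])
y = forward(x, W1, W2)
print(round(y, 4))
```

The first column of `W1` and the first entry of `W2` multiply the bias nodes, which is one common convention. The course notation may order the weights differently.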


6. If the step size in gradient descent is too large, what can happen?

- Overfitting

- The model will not converge

- We can reach maxima instead of minima

- None of the above
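The effect of step size is easy to see on a toy problem. The sketch below runs plain gradient descent on f(x) = x², an objective chosen here purely for illustration, with a small and a large step size.

```python
def gradient_descent(lr, x0=1.0, steps=50):
    """Minimize f(x) = x**2, whose gradient is 2*x."""
    x = x0
    for _ in range(steps):
        x -= lr * 2 * x   # each step multiplies x by (1 - 2*lr)
    return x

small = gradient_descent(lr=0.1)   # factor 0.8 per step: shrinks toward 0
large = gradient_descent(lr=1.1)   # factor -1.2 per step: oscillates and grows

print("lr=0.1 ->", small)
print("lr=1.1 ->", large)
```

With the small step the iterate approaches the minimum at 0. With the large step each update overshoots by more than it corrects, so the iterates grow in magnitude and never settle.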


7. On different initializations of your neural network, you get significantly different values of loss. What could be the reason for this?

- Overfitting

- Some problem in the architecture

- Incorrect activation function

- Multiple local minima
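Sensitivity to initialization can be illustrated with a one-dimensional non-convex function standing in for a network's loss surface. The function f(x) = x⁴ − 3x² + x and the two starting points are assumptions picked for this sketch.

```python
def f(x):
    return x**4 - 3 * x**2 + x

def grad_f(x):
    return 4 * x**3 - 6 * x + 1

def descend(x0, lr=0.01, steps=2000):
    """Plain gradient descent from the starting point x0."""
    x = x0
    for _ in range(steps):
        x -= lr * grad_f(x)
    return x

x_left = descend(-2.0)    # starts in the basin of the left minimum
x_right = descend(2.0)    # starts in the basin of the right minimum
loss_left, loss_right = f(x_left), f(x_right)

print("start -2.0 -> x = %.4f, loss = %.4f" % (x_left, loss_left))
print("start +2.0 -> x = %.4f, loss = %.4f" % (x_right, loss_right))
```

The same algorithm with the same step size settles in different minima with clearly different loss values, depending only on where it starts.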


8. The likelihood L(θ|X) is given by:

- P(θ|X)

- P(X|θ)

- P(X).P(θ)

- P(θ)P(X)
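For reference, the standard definition treats the likelihood as the probability (or density) of the observed data, viewed as a function of the parameters. The factorization in the second equality additionally assumes the samples $x_1, \dots, x_n$ in $X$ are independent and identically distributed, which the question itself does not state.

$$
L(\theta \mid X) = P(X \mid \theta) = \prod_{i=1}^{n} p(x_i \mid \theta)
$$

This is distinct from the posterior $P(\theta \mid X)$, which by Bayes' rule also involves a prior: $P(\theta \mid X) = \dfrac{P(X \mid \theta)\, P(\theta)}{P(X)}$.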


9. Why is proper initialization of neural network weights important?

- To ensure faster convergence during training

- To prevent overfitting

- To increase the model’s capacity

- Initialization doesn’t significantly affect network performance

- To minimize the number of layers in the network


10. Which of these are limitations of the backpropagation algorithm?

- It requires error function to be differentiable

- It requires activation function to be differentiable

- The ith layer cannot be updated before the update of layer i+1 is complete

- All of the above

- (a) and (b) only

- None of these


| Course Name | Introduction To Machine Learning |

| Category | NPTEL Assignment Answer |
